AMD has been defying the odds with a string of successful product launches over the past three years. The key takeaway from these launches is the significant, tangible performance gain from one generation to the next. With the latest AMD Ryzen 5000 series CPUs (Ryzen 9 5950X and 5900X), the company has even managed to equal Intel’s 10th Gen processors in a lot of aspects, and the same is expected of the ‘RDNA 2’-based Radeon RX 6000 series. NVIDIA’s RTX GPUs enjoy the lion’s share of the market, and the Radeon RX 6000 series GPUs are expected to help AMD grab a larger piece of the pie. Today’s launch includes the Radeon RX 6800 XT and the RX 6800, both high-end GPUs; the only GPU left to be launched is the Radeon RX 6900 XT, which is supposed to launch in December.

RDNA 2 brings several new features to the table which have significant performance implications. The Compute Units have been changed to incorporate a new render pipeline which also includes hardware-accelerated ray-tracing capabilities, something only NVIDIA had in the last generation. There is also the new Smart Access Memory feature, which lets the CPU address larger chunks of GPU memory and thereby improve performance. However, this is made possible by the Resizable BAR feature that’s part of the PCIe specification, so NVIDIA can easily replicate it via software updates. Then there’s DirectX 12 Ultimate support, which again puts AMD’s feature set in the same league as NVIDIA’s. So the competition is quite real and intense. All that’s left on the table is driver stability and AIB supply. We’ll get to hear a lot more about these once the cards hit the shelves. We didn’t face many issues with the drivers aside from some glitches, all of which have already been identified by AMD. Let’s take a closer look at the specifications below.

AMD Radeon RX 6800 XT / 6800 Specifications

The AMD Radeon RX 6000 series GPUs use the same 7nm process node as the previous generation, so one wouldn’t expect much in the way of power savings. TSMC has been refining the node, and some of those refinements will result in minor efficiency improvements. AMD’s architectural enhancements contribute the rest of the performance gains. We’ll take a close look at that later. Going by the core configurations, the two cards that we have today use the same GPU. The performance difference is brought about by the number of active Compute Units. We’ve seen cross-flashing of firmware in the past and that could very well work in this case as well. We haven’t cross-flashed the firmware on the GPUs yet, so we can’t confirm this.

The key difference between the Radeon RX 6800 XT and the RX 6800 comes down to 12 Compute Units. Both GPUs carry the same amount of video memory, so you’re never going to be constrained for video memory, and the two cards should easily handle ridiculous resolutions as long as there is enough horsepower within the GPU to back them up. RDNA 2 now has one Ray Accelerator per CU, so there should be a distinct difference in ray-tracing performance between the two cards. How that compares to NVIDIA will be explored in the performance section.
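To put the 12-CU gap in perspective, here is a minimal sketch of the arithmetic. The text only states the 12-CU difference and the one-Ray-Accelerator-per-CU ratio; the absolute CU counts (72 and 60) come from AMD’s public spec sheets rather than from this article, and the function names are ours, purely for illustration. It gives a rough upper bound on paper, not a prediction of actual ray-tracing performance.

```python
# Compute Unit counts per card. These come from AMD's public spec sheets,
# not from the article text, which only mentions the 12-CU difference.
CU_COUNTS = {"RX 6800 XT": 72, "RX 6800": 60}

# RDNA 2 has one Ray Accelerator per Compute Unit (stated in the text).
RAY_ACCELERATORS_PER_CU = 1


def ray_accelerators(card: str) -> int:
    """Return the number of Ray Accelerators on the given card."""
    return CU_COUNTS[card] * RAY_ACCELERATORS_PER_CU


def relative_advantage(faster: str, slower: str) -> float:
    """Fraction by which `faster` has more Ray Accelerators than `slower`."""
    return ray_accelerators(faster) / ray_accelerators(slower) - 1.0


if __name__ == "__main__":
    gap = ray_accelerators("RX 6800 XT") - ray_accelerators("RX 6800")
    advantage = relative_advantage("RX 6800 XT", "RX 6800")
    print(f"Ray Accelerator gap: {gap}")
    print(f"RX 6800 XT advantage on paper: {advantage:.0%}")
```

Clock speeds, memory bandwidth and driver maturity all affect real results, so the measured gap can be smaller or larger than this paper figure.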

With the new set of benchmarks, we’ve featured some of the older GTX 10 series cards along with most of the RTX 20 series cards, including the Super cards. Last year’s AMD RX 5000 series cards are present to help gauge the generational improvement of RDNA 2 over RDNA. Also included are the new RTX 30 cards, so you can get a good idea of how the RDNA 2 cards go up against NVIDIA’s latest GPUs. And since the launch windows of the two line-ups overlap, this list couldn’t have been more even. We have removed the older AMD Vega cards since we haven’t retested them in a long time and a lot of driver-related performance improvement cannot be gauged without a retest. As always, we have a section for synthetic benchmarks as well as gaming benchmarks, with plenty of charts to showcase differences. Coming to the rig, we’ve had to test the cards on Intel as well as AMD processors considering the inclusion of the new Smart Access Memory feature. We’ve tried to keep the rigs as similar as we could. Here are the two rigs that we’re running our benchmarks on.

INTEL RIG

Processor – Intel Core i9-10900K

CPU Cooler – Corsair H115i RGB PLATINUM

Motherboard – ASUS ROG MAXIMUS XII EXTREME

RAM – 2x 8 GB G.Skill Trident Z Royal 3600 MHz

SSD – Kingston KC2500 NVMe SSD

PSU – Cooler Master V1200

AMD RIG

Processor – AMD Ryzen 9 5950X / 5900X

CPU Cooler – Corsair H115i RGB PLATINUM

Motherboard – ASUS ROG CROSSHAIR VIII

RAM – 2x 8 GB G.Skill Trident Z Royal 3600 MHz

SSD – WD SN550 Blue 1 TB NVMe SSD

PSU – Cooler Master V1200

Graphics cards

AMD RX 6800 XT

AMD RX 6800

RTX 3090

RTX 3080

RTX 3070

RTX 2080 Ti

RTX 2080 Super

RTX 2080

RTX 2070 Super

RTX 2070

RTX 2060 Super

RTX 2060

GTX 1080 Ti

GTX 1080

AMD RX 5700 XT

AMD RX 5700

AMD RX 5600 XT

Some of these cards were sourced from friends owing to the fact that the current lockdown situation has made it extremely difficult to get graphics cards in time for the benchmarks. Let’s start with the synthetic benchmarks.

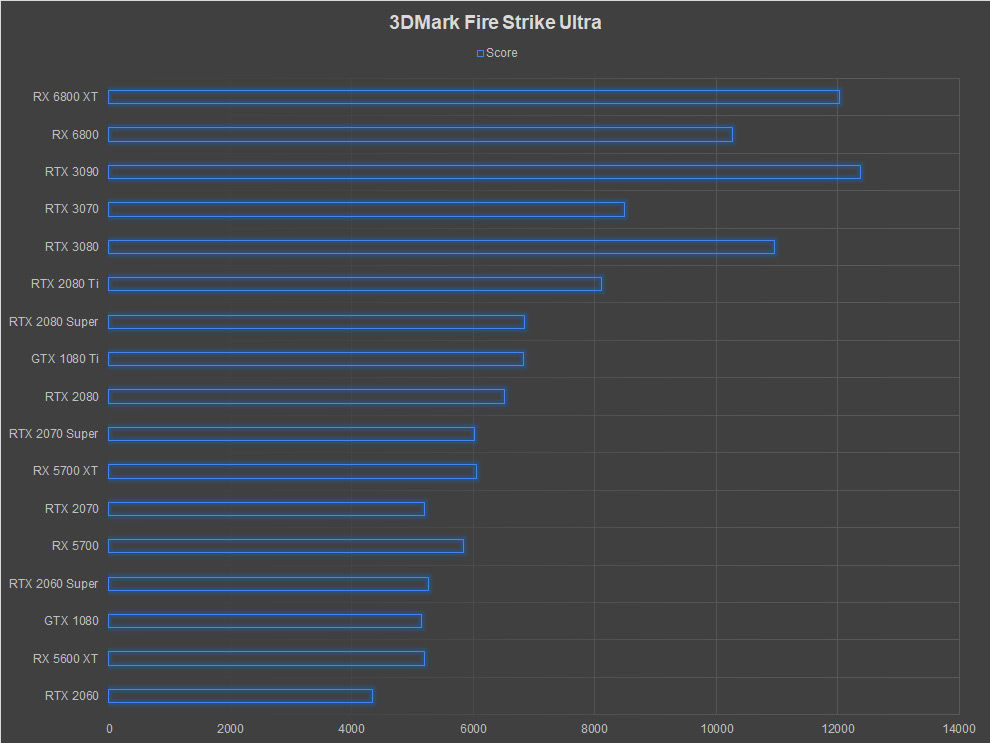

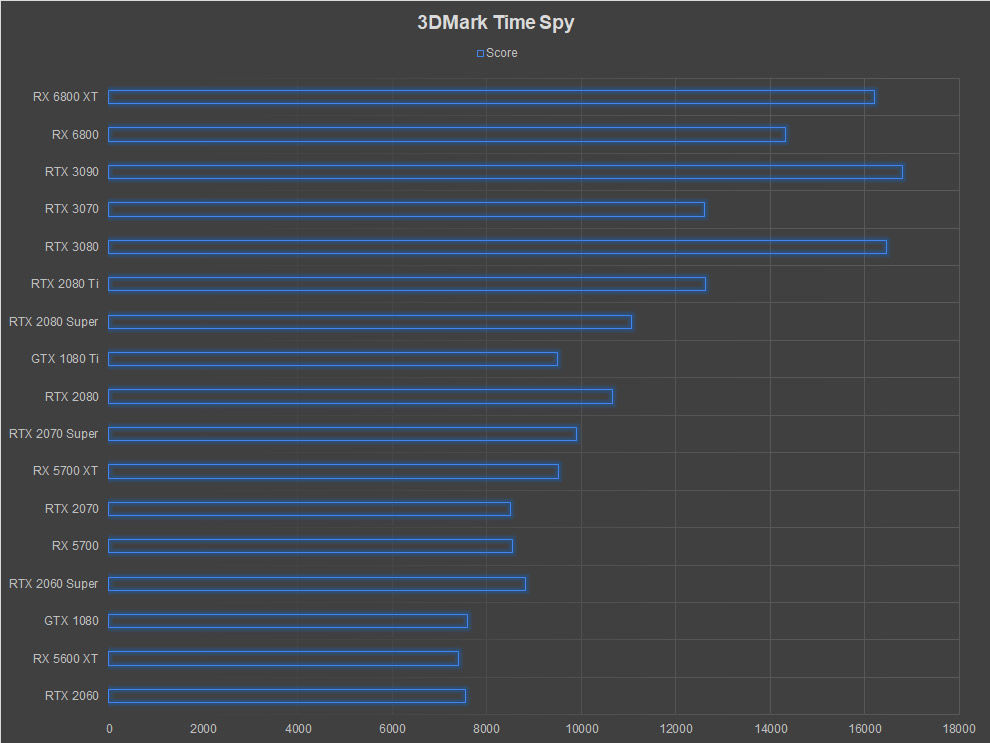

3DMark

In 3DMark, we prefer the Fire Strike Ultra benchmark since the Extreme and normal runs have started producing ridiculous scores with newer GPUs. The other benchmark within 3DMark which we use is Time Spy, and we run both the normal and the Extreme runs. Here, we’re showcasing Fire Strike Ultra and Time Spy scores. The Radeon RX 6800 XT comes really close to the NVIDIA RTX 3090. Considering that the latter is a $1,499 card, this is a huge performance gain. The Radeon RX 6800 isn’t that far behind. The RTX 3080 scores more than the RX 6800 but it also costs a lot more.

Time Spy replicates the same results. The RTX 3090 is still at the very top of the scoreboard with the RTX 3080 in tow and the RX 6800 XT right behind it. Then comes the RX 6800 and after a decent gap lies the RTX 3070.
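The value argument above can be made explicit as benchmark points per dollar. The sketch below is only an illustration: of the prices, only the RTX 3090’s $1,499 appears in this review, and the others are the launch MSRPs as announced by AMD and NVIDIA. The scores are hypothetical placeholders, not our measured results, and the function names are ours.

```python
from typing import Dict, List

# Launch MSRPs in USD. Only the RTX 3090 figure is quoted in the review;
# the rest are the publicly announced launch prices (street prices differ).
LAUNCH_PRICE_USD: Dict[str, float] = {
    "RTX 3090": 1499,
    "RTX 3080": 699,
    "RX 6800 XT": 649,
    "RX 6800": 579,
}

# HYPOTHETICAL placeholder scores, NOT measured results.
# Replace these with the scores read off the charts.
PLACEHOLDER_SCORES: Dict[str, float] = {
    "RTX 3090": 10000,
    "RTX 3080": 9000,
    "RX 6800 XT": 9500,
    "RX 6800": 8000,
}


def points_per_dollar(scores: Dict[str, float],
                      prices: Dict[str, float]) -> Dict[str, float]:
    """Benchmark points per dollar for every card that has a known price."""
    return {card: scores[card] / prices[card]
            for card in scores if card in prices}


def rank_by_value(scores: Dict[str, float],
                  prices: Dict[str, float]) -> List[str]:
    """Card names sorted from best to worst points per dollar."""
    value = points_per_dollar(scores, prices)
    return sorted(value, key=value.get, reverse=True)


if __name__ == "__main__":
    for card in rank_by_value(PLACEHOLDER_SCORES, LAUNCH_PRICE_USD):
        ratio = PLACEHOLDER_SCORES[card] / LAUNCH_PRICE_USD[card]
        print(f"{card}: {ratio:.2f} points per dollar")
```

A single synthetic score is a crude proxy for value, so treat a ranking like this as a starting point rather than a buying recommendation.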

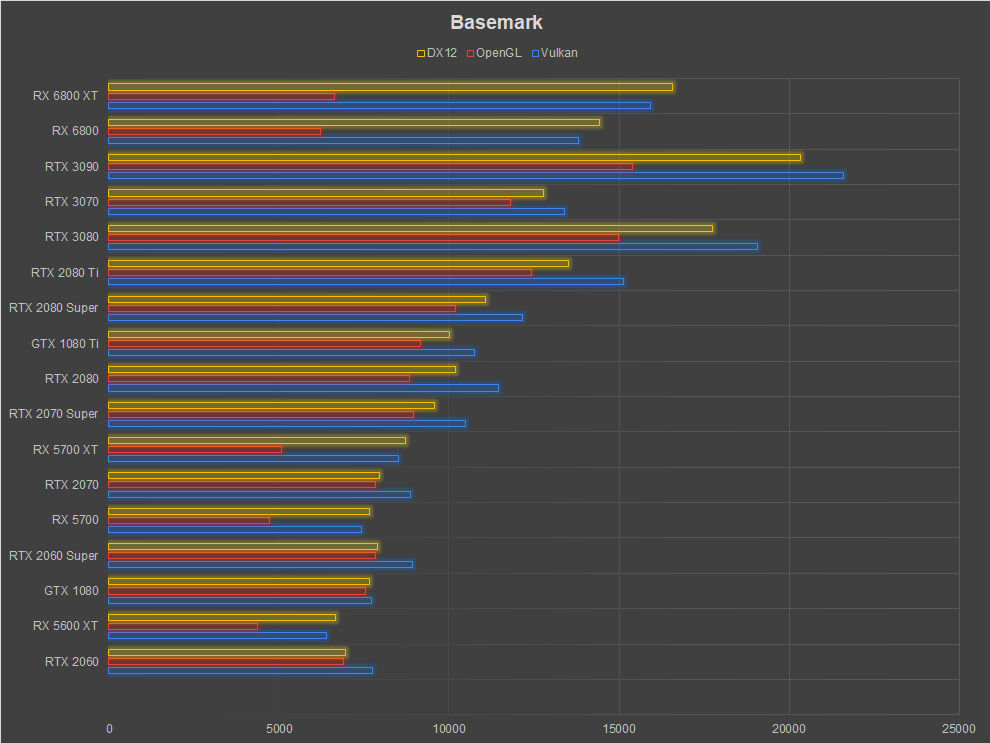

Basemark GPU

Basemark GPU is a nice benchmark to compare the performance of different graphics APIs between cards. We can use the same textures with OpenGL, Vulkan and DirectX 12 to see if the graphics card excels at any particular API more than the rest or if the performance is consistent across the board. The RX 6800 comes third, behind the RTX 3090 and the RTX 3080. NVIDIA cards have a clear advantage in this benchmark and the gap between the RTX 3070 and the RX 6800 has narrowed. What stood out for us was the OpenGL performance as the RDNA 2 cards tanked compared to the competition and are showing only a meagre generational improvement.

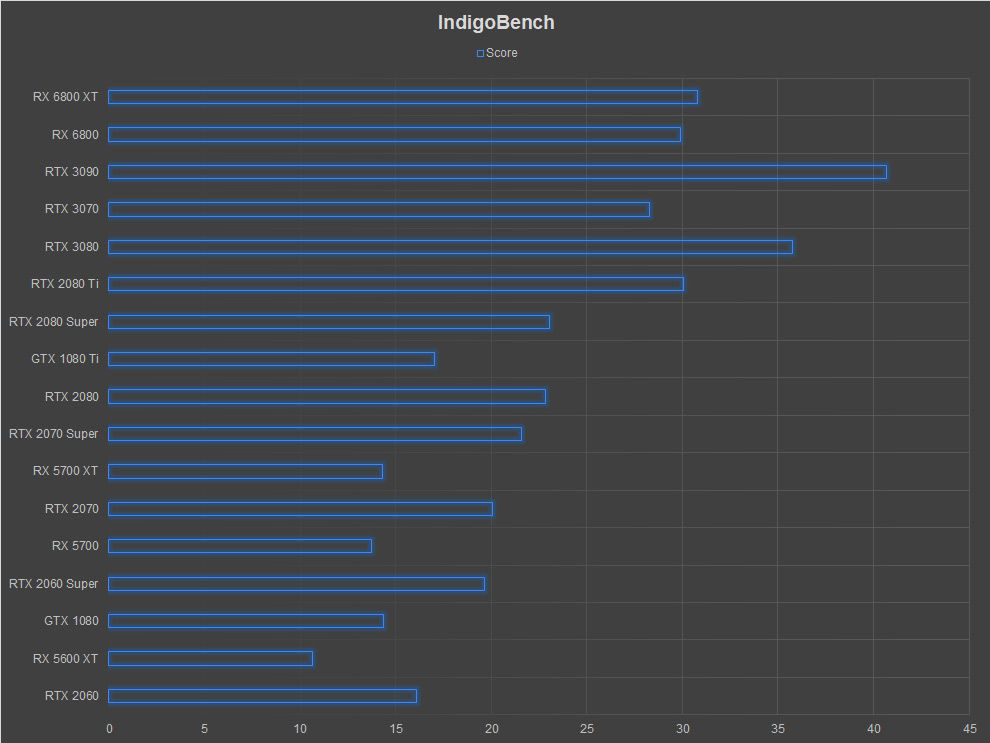

IndigoBench

This is the latest synthetic to be added to our test suite. IndigoBench is based on the Indigo 4 rendering engine that’s available for popular software such as SketchUp, Blender, 3ds Max, Cinema 4D, Revit and Maya. It uses OpenCL and can benchmark both the CPU and GPU, together or individually. We prefer to test just the GPU with the software, and the score provided is in terms of ‘M samples/s’. The RTX 3090 and the RTX 3080 maintain a lead here whereas the RX 6800 XT and RX 6800 come in at the third and fourth positions. The performance difference between the RX 6800 XT and RX 6800 is also quite narrow.

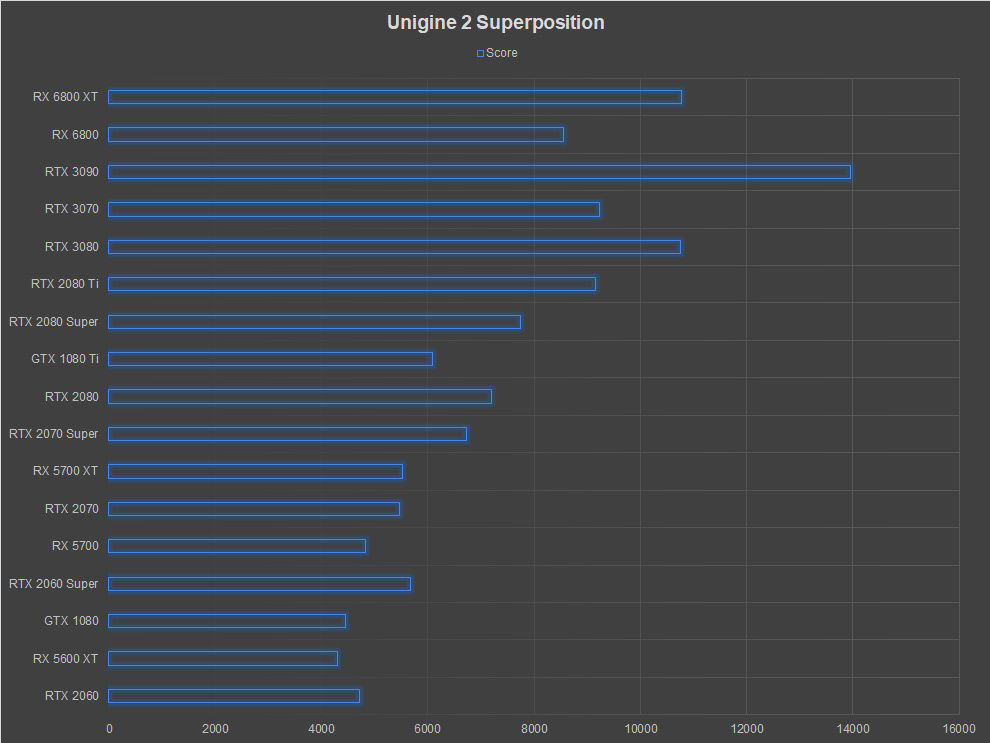

Unigine SuperPosition

Unigine SuperPosition is the other popular DX11 benchmark which we prefer to use alongside 3DMark. We run it on the Extreme Quality preset and use the score metric for delineating the performance between graphics cards. The NVIDIA cards retain the lead again but with a much narrower margin. The RX 6800 XT and the RTX 3080 are almost equivalent in this benchmark.

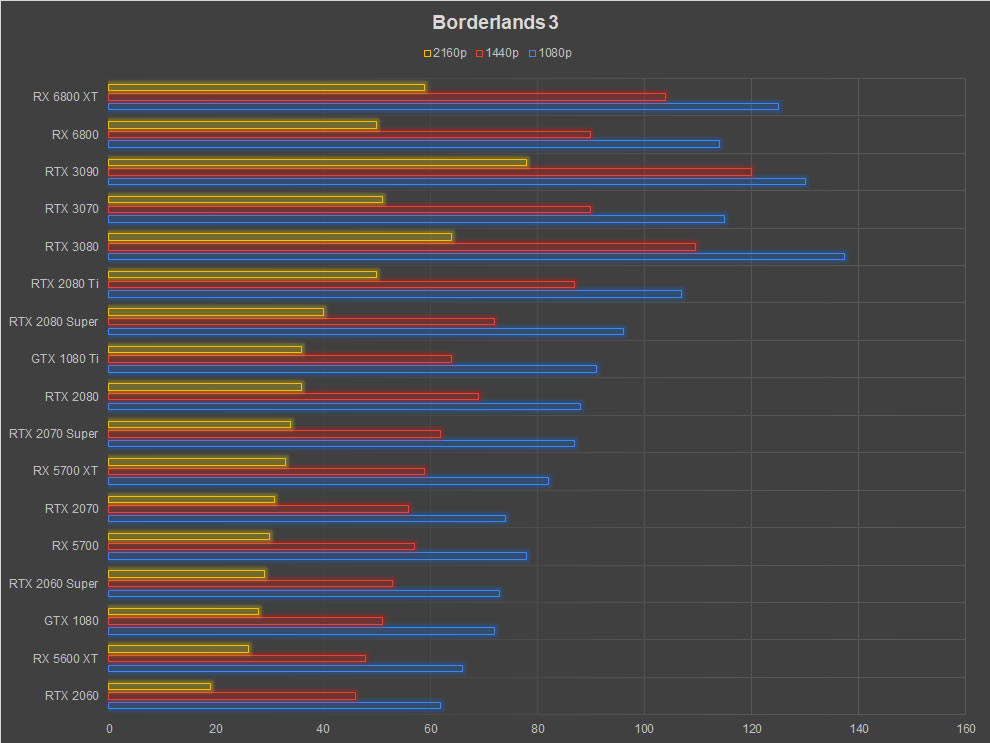

Borderlands 3

Borderlands 3 is Gearbox’s latest game in the Borderlands franchise. It uses Unreal Engine 4 and allows you to switch between DirectX 11 and 12. The game has an inbuilt benchmark which takes you through an array of stressful scenarios. The DirectX 12 version is quite flaky, so we prefer to use the DirectX 11 API while benchmarking the game. All our existing data is based on the DX11 version, so there’s no point switching now.

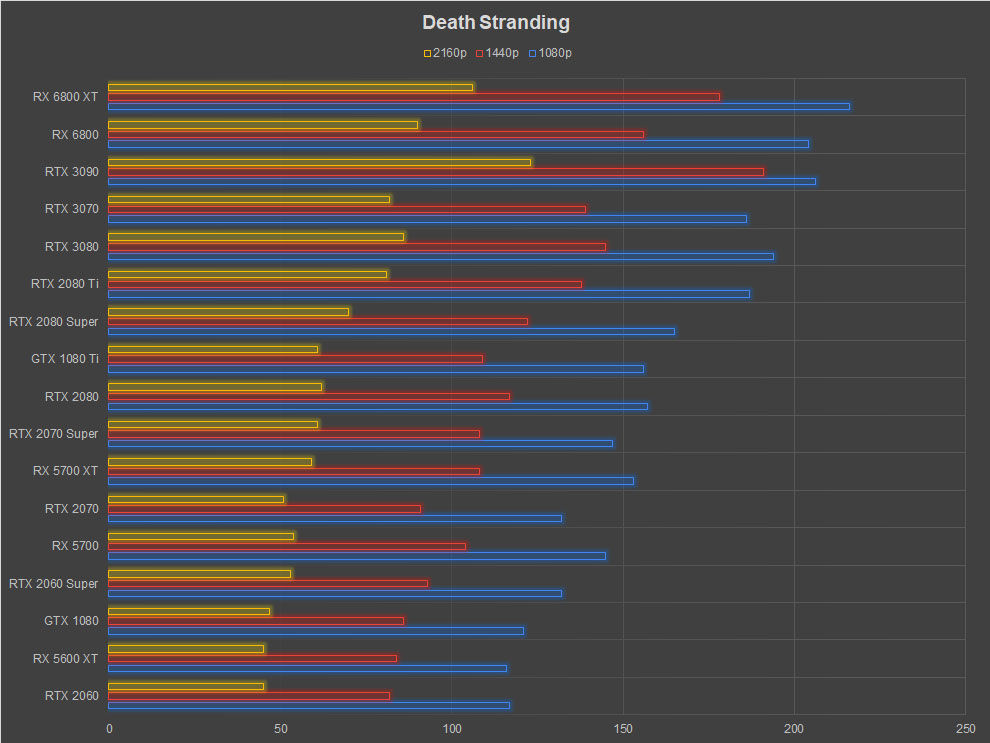

Death Stranding

Hideo Kojima’s Death Stranding is a recent addition to the PC scene, having been a PlayStation exclusive until July 2020. Being a console game, its engine is well optimised to make use of the scarce resources one gets with consoles. Even so, the PC version from 505 Games doesn’t feel like a console port. The Decima game engine is capable of rendering up to 4K, can use high dynamic-range imaging and is also geared for the upcoming next-gen PlayStation console. It’s also the same engine used by Horizon Zero Dawn.

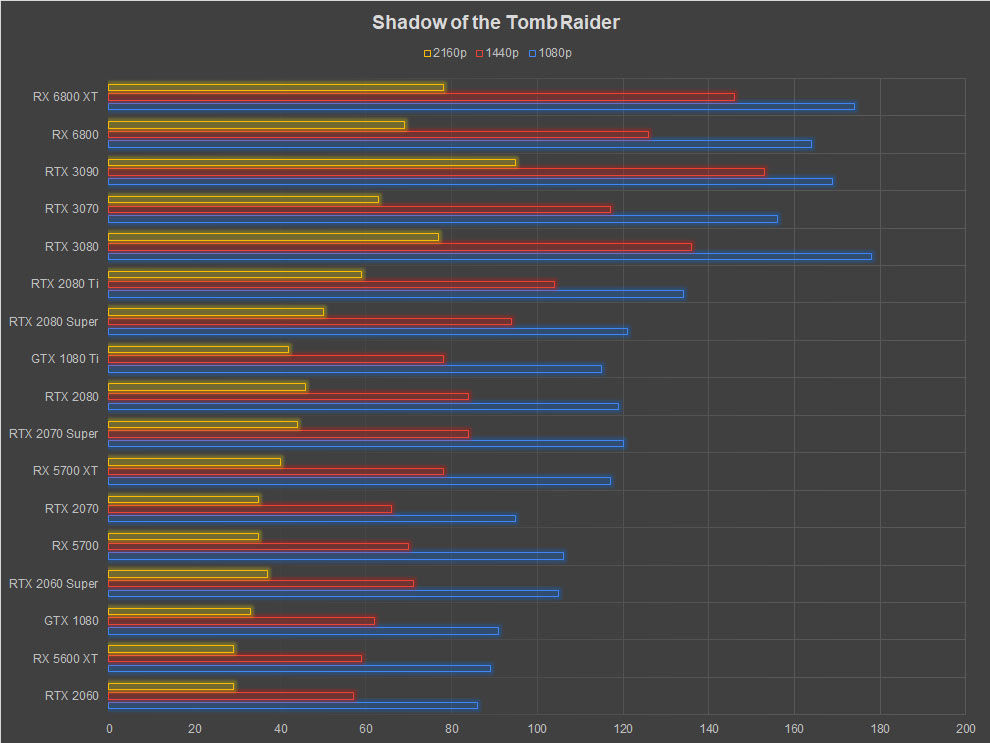

Shadow of the Tomb Raider

We run Shadow of the Tomb Raider in DirectX 12 mode. It ends up consuming a little more memory, as do most games that support both DirectX 11 and DirectX 12. The preset is set to the highest quality with HBAO+ enabled. The in-game benchmark tool takes us through several scenes which feature open spaces as well as closed spaces with lots of world detail aside from the central character.

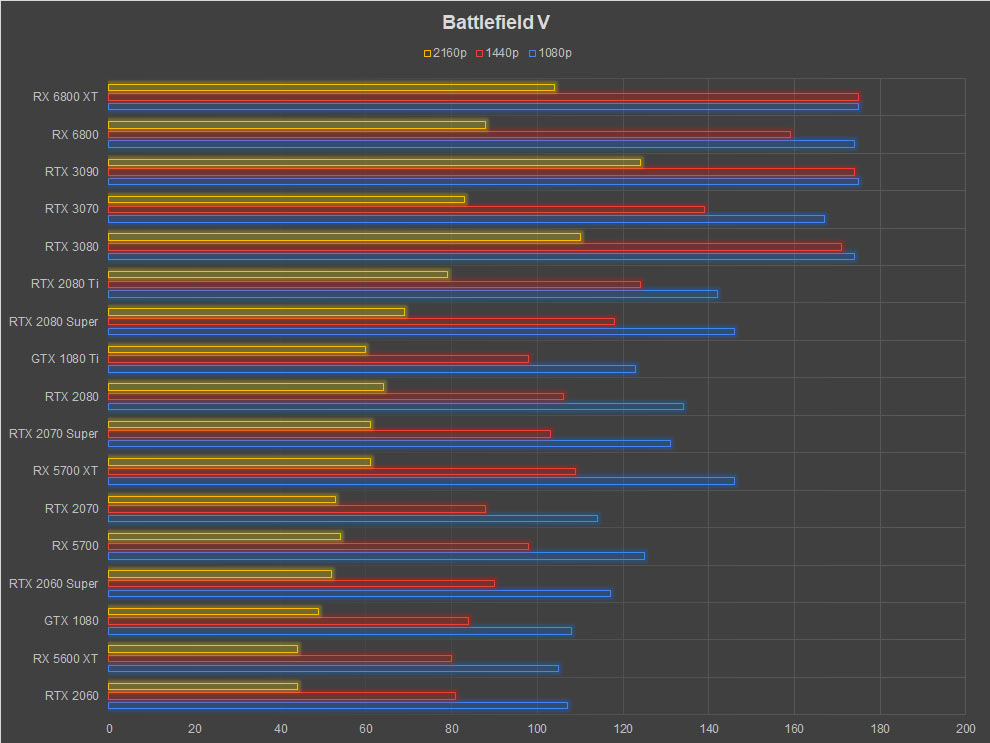

Battlefield V

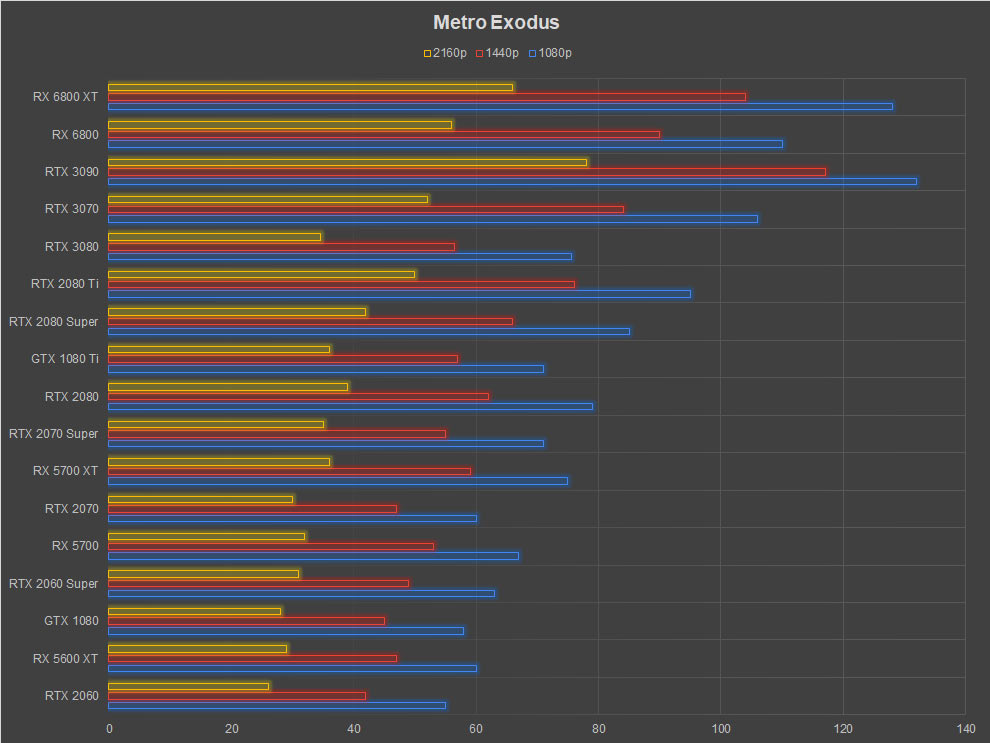

Metro Exodus

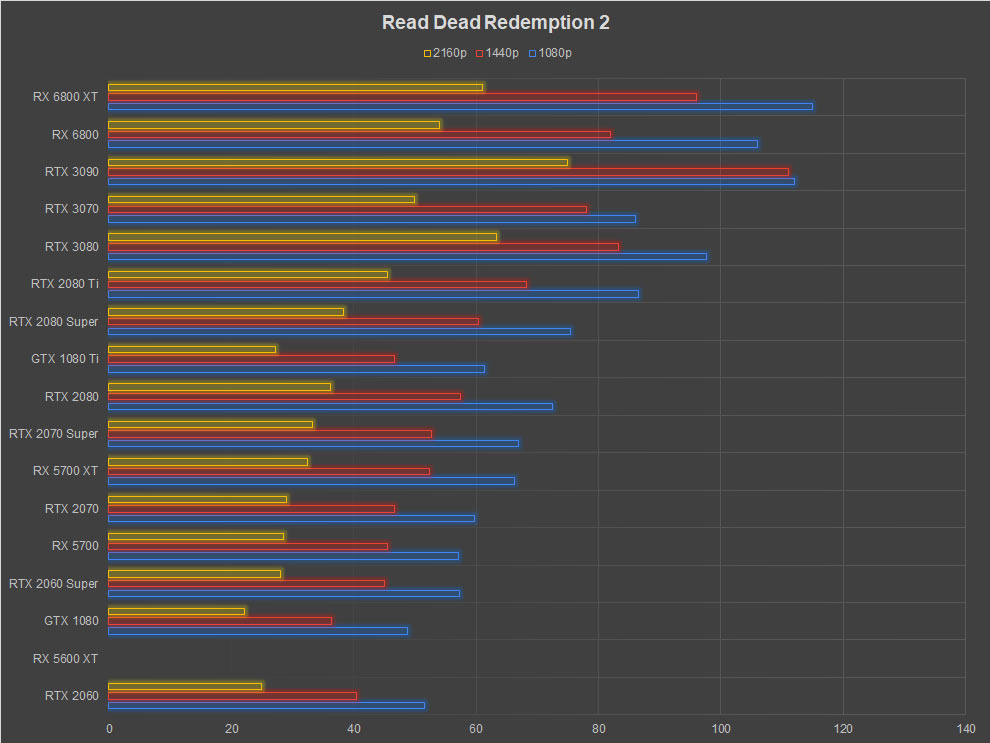

Red Dead Redemption 2

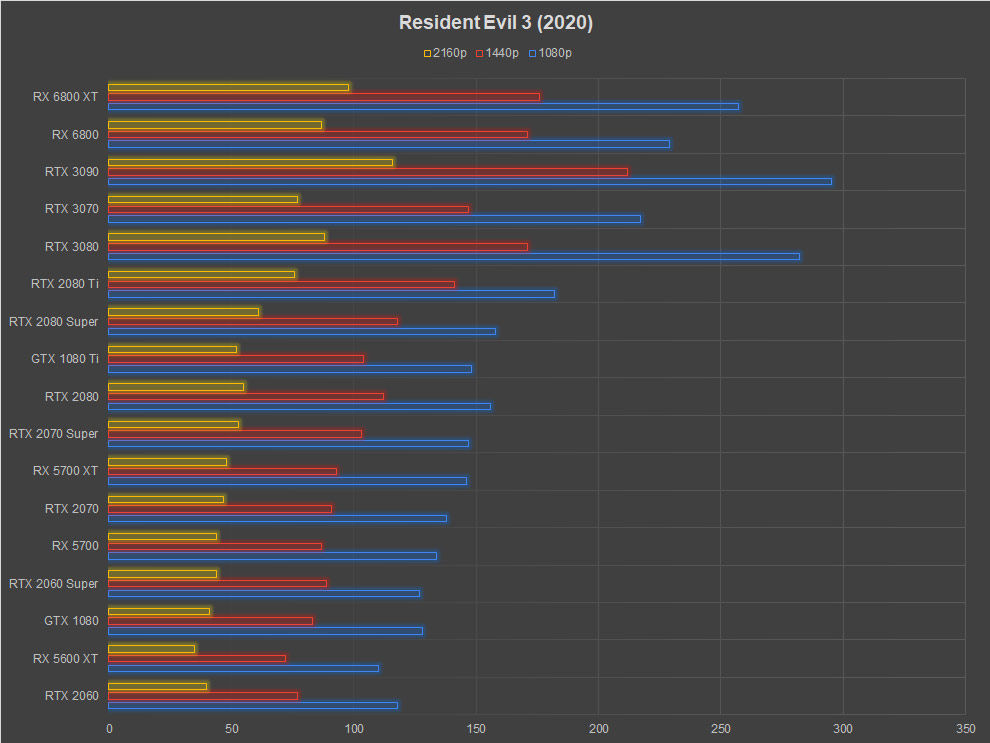

Resident Evil 3 (2020)

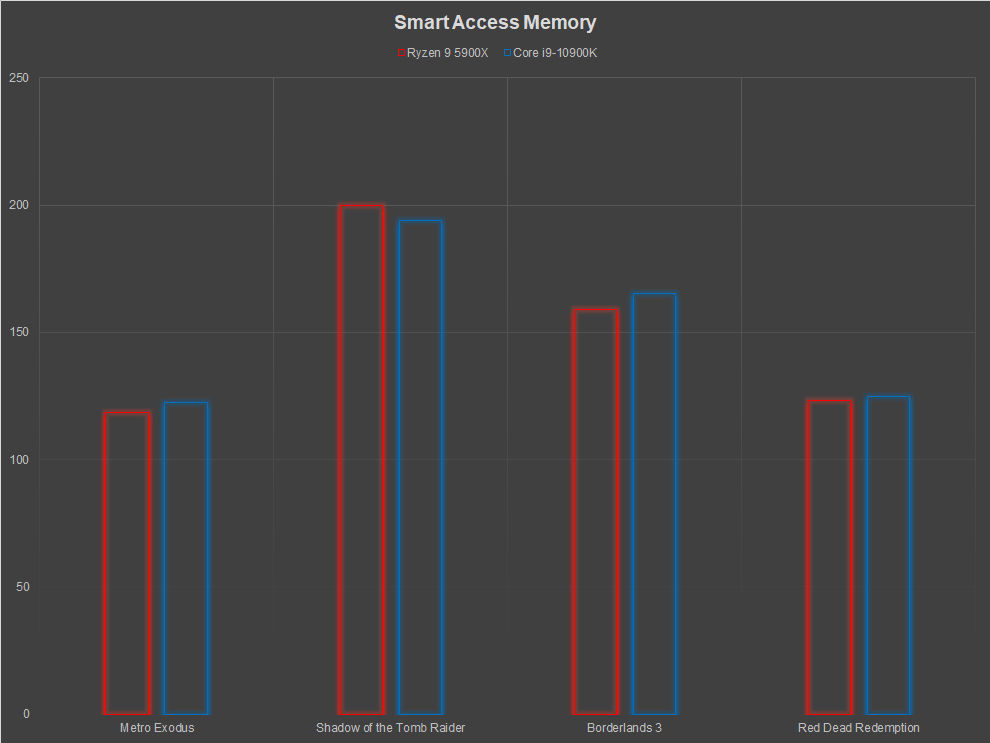

Smart Access Memory (AMD+AMD vs AMD+Intel)

We’ve already touched upon the PCIe standard feature that allows the CPU to access a larger chunk of the GPU VRAM. With a few tweaks in the BIOS on supported boards, you can switch on the Smart Access Memory feature and that’s supposed to give the AMD processors a nice advantage over Intel processors. We tested the feature across four video games and found that it could go either way.

Shadow of the Tomb Raider was the only game in which we saw AMD processors gain the advantage, while in Metro Exodus, Borderlands 3 and Red Dead Redemption 2, we saw Intel retaining its lead. We understand that four games are too few to get the full…
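For anyone repeating this comparison on their own hardware, a minimal sketch of how the platform delta could be tabulated follows. The frame rates and game names below are hypothetical placeholders, not our measured results, and the function names are ours. A positive value means the AMD rig with Smart Access Memory enabled came out ahead of the Intel rig.

```python
from typing import Dict, Tuple


def percent_delta(amd_fps: float, intel_fps: float) -> float:
    """Percentage by which the AMD-rig result differs from the Intel-rig result.

    Positive means the AMD rig is faster, negative means the Intel rig is faster.
    """
    return (amd_fps - intel_fps) / intel_fps * 100.0


def summarize(results: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Map each game to its AMD-vs-Intel percentage delta.

    `results` maps a game name to a pair (amd_fps, intel_fps).
    """
    return {game: percent_delta(amd, intel)
            for game, (amd, intel) in results.items()}


if __name__ == "__main__":
    # HYPOTHETICAL placeholder frame rates, NOT measured results.
    placeholder = {
        "Game A": (105.0, 100.0),
        "Game B": (95.0, 100.0),
    }
    for game, delta in summarize(placeholder).items():
        leader = "AMD rig" if delta > 0 else "Intel rig"
        print(f"{game}: {delta:+.1f}% ({leader} ahead)")
```

With only a handful of titles, small deltas of a few percent are within run-to-run variance, so averaging several runs per game before comparing is advisable.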

Source: Digit